A page from the "Causes of Color" exhibit...

How does the brain interpret color?

Intricate layers and connections of nerve cells in the retina were drawn by the famed Spanish anatomist Santiago Ramón y Cajal around 1900. Rod and cone cells are at the top. Optic nerve fibers leading to the brain may be seen at the bottom right.

Our ability to see color is something most of us take for granted, yet it is a highly complex process that raises the question of whether the "red" or "blue" we see is the same "red" or "blue" that others see.

How do we differentiate wavelengths?

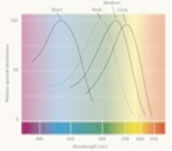

Typically, humans have three different types of cones with photo-pigments that sense three different portions of the spectrum. Each cone is tuned to perceive primarily long wavelengths (sometimes called red), middle wavelengths (sometimes called green), or short wavelengths (sometimes called blue), referred to as L-, M-, and S-cones respectively. The peak sensitivities are provided by three different photo-pigments. Light at any wavelength in the visual spectrum (ranging from 400 to 700 nm) will excite one or more of these three types of sensors. The visual system determines the color by comparing the signals from the three cone types.

Colorblindness results when either one photo-pigment is missing, or two happen to be the same. See the Colorblind page for more detail. Interestingly, there is variation even among people with full color vision. Could these faint differences in color perception account for differences in aesthetic taste?

Individual cones signal the rate at which they absorb photons, without regard to photon wavelengths. Though photons of different wavelengths have a different probability of absorption, the wavelength does not change the resulting neural effect once it has been absorbed. Single photoreceptors transmit no information about the wavelengths of the photons that they absorb. Our ability to perceive color depends upon comparisons of the outputs of the three cone types, each with different spectral sensitivity. These comparisons are made by the neural circuitry of the retina.
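The idea in the paragraph above, that a single cone cannot tell wavelength from intensity but a comparison across cone types can, may be easier to see in a small sketch. Everything numeric here is an illustrative assumption rather than something taken from the exhibit: the Gaussian curve shape, the approximate peak wavelengths in `CONE_PEAKS_NM`, the shared `CURVE_WIDTH_NM`, and the function names are all hypothetical stand-ins for real measured cone sensitivities.

```python
import math

# Illustrative assumptions (not from the exhibit): Gaussian sensitivity curves
# with approximate peak wavelengths in nm and one shared width.
CONE_PEAKS_NM = {"L": 560.0, "M": 530.0, "S": 420.0}
CURVE_WIDTH_NM = 40.0


def absorption_probability(cone, wavelength_nm):
    """Relative probability that the given cone type absorbs a photon."""
    peak = CONE_PEAKS_NM[cone]
    return math.exp(-((wavelength_nm - peak) ** 2) / (2 * CURVE_WIDTH_NM ** 2))


def cone_response(cone, wavelength_nm, intensity):
    """Photon absorption rate: the only quantity a single cone signals."""
    return intensity * absorption_probability(cone, wavelength_nm)


def matching_intensity(cone, wavelength_a, intensity_a, wavelength_b):
    """Intensity at wavelength_b that gives this cone the same response as light A."""
    target = cone_response(cone, wavelength_a, intensity_a)
    return target / absorption_probability(cone, wavelength_b)


# Light A: 530 nm at unit intensity. Light B: 570 nm, brightened until the
# M cone responds exactly as it does to light A.
intensity_b = matching_intensity("M", 530.0, 1.0, 570.0)

m_a = cone_response("M", 530.0, 1.0)
m_b = cone_response("M", 570.0, intensity_b)
l_a = cone_response("L", 530.0, 1.0)
l_b = cone_response("L", 570.0, intensity_b)

print(f"M cone: A={m_a:.3f}  B={m_b:.3f}  (indistinguishable)")
print(f"L cone: A={l_a:.3f}  B={l_b:.3f}  (different)")
```

Under these assumed curves, the M cone gives the same output for both lights, so on its own it carries no wavelength information. The L cone responds differently to the two lights, which is why comparing the outputs of more than one cone type can separate them.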

Sensitivity of the different cones to varying wavelengths. The response varies by wavelength for each kind of receptor. For example, the middle-wavelength (M) cone is more sensitive to pure green wavelengths than to red wavelengths.

How is the neural signal physically generated?

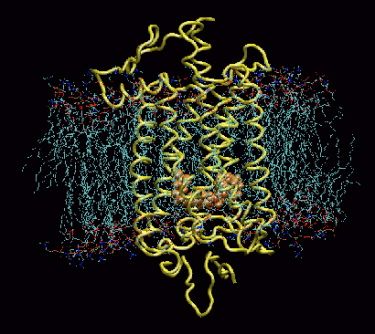

The retina contains millions of specialized photoreceptor cells known as rods and cones. Within these receptors are membranes. The membranes contain visual pigments that absorb light and undergo chemical changes that trigger an electrical signal. The visual pigments for both cones and rods are similar in that they consist of retinene joined at both ends to retinal proteins called opsins.

Rhodopsin is the retinal protein that is found in the rod cells in the eye and that is responsible for our night vision. The three types of cone cells contain slightly different opsins, which form the basis for color vision. These three types of cone opsins account for the differences in peak wavelength absorption for each pigment. The physical difference between types of opsin can be as small as a few amino acids.

Model of rhodopsin protein from a cow. The rhodopsin (yellow) is embedded in the membrane of the rod cell. Retinal is shown in orange. When light enters the eye, photons cause the retinene to rearrange, switching from 11-cis-retinal to all-trans-retinal (isomerization). When this happens, the straight end of the retinene breaks loose from the opsin, and the rhodopsin opens a portal in the nerve for a fraction of a second, and a nerve pulse is initiated. Moments later, the portal closes, as the retinene transforms back to the cis form, reattaching to an opsin to regenerate the pigment.

Where does the signal go when it reaches the brain?

How is color determined? The signal from the retina is analyzed by nerve cells (retinal ganglion cells), which compare the stimulation of neighboring cones and calculate whether the light reaching a patch of cones is more blue or more yellow, and more red or more green. Next, the signal travels to the brain, where it is divided into several pathways - like fiber optics branching throughout the cortex. For example, visual signals from the photoreceptors pass to retinal ganglion cells, which code color information, and then to the lateral geniculate nucleus (LGN) in the thalamus, and onwards to the primary visual cortex. The primary visual cortex (known as V1) preserves the spatial relationships of images on the retina. This property is called retinotopic organization.
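The blue-or-yellow and red-or-green comparison described above can be sketched as two difference signals computed from the three cone responses. This is a deliberately simplified model: the function names and the equal weights are assumptions chosen for illustration, not the actual wiring of retinal ganglion cells.

```python
def opponent_signals(l, m, s):
    """Combine L, M, and S cone responses into two opponent signals.

    The weights are hypothetical, chosen only to illustrate the comparison.
    """
    red_green = l - m                # positive: redder, negative: greener
    blue_yellow = s - (l + m) / 2    # positive: bluer, negative: yellower
    return red_green, blue_yellow


def describe(l, m, s):
    """Label which side of each opponent pair a set of cone responses falls on."""
    red_green, blue_yellow = opponent_signals(l, m, s)
    rg_label = "red" if red_green > 0 else "green" if red_green < 0 else "neutral"
    by_label = "blue" if blue_yellow > 0 else "yellow" if blue_yellow < 0 else "neutral"
    return rg_label, by_label


# Strong L response, weak S response: the light leans red and yellow.
print(describe(0.9, 0.3, 0.1))
# Strong S response: the light leans blue (and slightly green here).
print(describe(0.2, 0.3, 0.9))
# Equal responses: neither opponent signal is driven in either direction.
print(describe(0.5, 0.5, 0.5))
```

The point of the sketch is that each output depends only on differences between cone responses, so light that excites all three cone types equally leaves both opponent signals at zero.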

Studies of the brain

Around 1970, researchers began to study the visual brain in earnest. One of the chief discoveries is that it is composed of many different visual areas that surround the primary visual cortex (V1).

Anatomically, the color pathways are relatively well charted. In the monkey, they involve areas V1, V2, V4 and the infero-temporal cortex. A similar pathway is involved in the human brain; imaging studies show that V1, V4 and areas located within the fusiform gyrus in the medial temporal lobe are activated by colored stimuli.

The visual brain consists of multiple functionally specialized areas that receive their input largely from V1 (yellow) and the area surrounding it, known as V2 (green). These are currently the most thoroughly charted visual areas, but not the only ones. Other visual areas are continually being discovered.

Each group of areas is specialized to process a particular attribute of the visual environment by virtue of the specialized signals it receives. Cells specialized for a given attribute, such as motion or color, are grouped together in anatomically identifiable compartments within V1, with different compartments connecting with different visual areas outside V1. Each compartment confers its specializations on the corresponding visual area.

V1 acts as a post office, distributing different signals to different destinations; it is just the first, vital stage in an elaborate mechanism designed to extract essential information from the visual world. What we now call the visual brain is therefore V1 in combination with the specialized visual areas with which it connects either directly or indirectly. Parallel systems are devoted to processing different attributes of the visual world simultaneously, each system consisting of the specialized cells in V1 plus the specialized areas to which these cells project. In other words, vision is modular. Researchers have long debated why a strategy has evolved to process the different attributes of the visual world in parallel. The most plausible explanation is that we need to discount certain kinds of information in order to acquire knowledge about different attributes. With color, it is the precise wavelength composition of the light reflected from a surface that has to be discounted; with size, the precise viewing distance must be ignored; and with form, the viewing angle must become irrelevant.

Recent evidence has shown that the processing systems are also perceptual systems: activity in each can result in a percept independent of the other systems. Each processing-perceptual system has a slightly different processing duration, and reaches its perceptual end-point at a slightly different time from the others. There is a perceptual asynchrony in vision: color is seen before form, which is seen before motion. Color is processed ahead of motion by a time difference on the order of 60-100 ms. This means that visual perception is also modular. The visual brain is characterized by a set of parallel processing-perceptual systems and a temporal hierarchy in visual perception.

The eye alone does not tell the story

In order for visual processing to develop and function properly, the brain must be visually nourished at critical periods after birth. Numerous clinical and physiological studies have shown that individuals who are born blind and to whom vision is later restored find it very difficult, if not impossible, to learn to see even rudimentary forms.

In 1910, for example, the surgeons Moreau and Le Prince wrote about their successful operation on an eight-year-old boy who had been blind since birth because of cataracts. Following the operation, they were anxious to discover how he could see. But when they removed the bandages from his physically perfect eyes, they were confused and disappointed. They waved a hand in front of the boy’s eyes and asked him what he saw. The boy replied meekly, "I don’t know." He only saw a vague change in brightness; he did not know it was a moving hand. Not until he was allowed to touch the hand did he exclaim, "It’s moving!" Without visual input during his early development, the boy had never developed the physiological stage of visual processing that is necessary for vision. The optical stage provides the raw message, but it is the physiological stage that determines what can be seen.